
Should you buy an aero road helmet? How much faster could you be?

Is it worth spending your hard-earned cash on an aero road helmet? We're going to check out everything that might influence your decision.

It’s all about the aggregation of marginal gains in cycling these days. You know the theory: make a small gain in everything you do and all those small gains could add up to become a winning margin.

But just how marginal is the gain you can get by swapping from a standard bike helmet to an aero road helmet?

The claims

Brands often make claims about the amount of time you’ll save by switching to their aero helmet.

Smith, for example, says that its Overtake helmet will save you about 25 seconds compared to a non-aero Giro Aeon over 40km (25 miles) at 40km/h (25mph). Smith presumably means that a rider wearing the Giro Aeon would be going slightly slower than 40km/h at the same power output as an identical rider doing 40km/h in an Overtake.
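To put that reading of Smith's claim into numbers, here is a minimal sketch. It assumes the interpretation above is right (same power, the non-aero rider simply finishes 25 seconds later); the function name is ours for illustration, not anything from Smith.

```python
# Figures as quoted from Smith's claim in the article.
DISTANCE_KM = 40.0
AERO_SPEED_KMH = 40.0
CLAIMED_SAVING_S = 25.0


def implied_speed_kmh(distance_km, fast_speed_kmh, saving_s):
    """Average speed of a rider who finishes `saving_s` seconds behind
    a rider covering `distance_km` at `fast_speed_kmh`."""
    fast_time_s = distance_km / fast_speed_kmh * 3600.0
    slow_time_s = fast_time_s + saving_s
    return distance_km / (slow_time_s / 3600.0)


slow_speed = implied_speed_kmh(DISTANCE_KM, AERO_SPEED_KMH, CLAIMED_SAVING_S)
print(f"Implied speed in the non-aero helmet: {slow_speed:.2f} km/h")
print(f"Speed difference: {AERO_SPEED_KMH - slow_speed:.2f} km/h")
```

On that assumption the non-aero rider averages roughly 39.7km/h, so the claimed gain is a little under 0.3km/h.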

Louis Garneau, on the other hand, released a paper when it launched its Course helmet in which it said that if a 70kg rider on a 9.1kg bike covered 40km in 53:20mins wearing a regular road helmet (stick with us!), the same rider would cover the distance in 51:22mins in an (unnamed) aero road helmet (presumably at the same power output), and in 50:40mins in the Louis Garneau Course.

In other words, according to Louis Garneau, swapping from a regular road helmet to the Course would save that rider 2:40mins in that scenario. That’s a huge chunk of time!
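The arithmetic behind those Louis Garneau figures can be checked with a short sketch. The times are the ones quoted above; the helper names are ours, and the average speeds are simply derived from the 40km distance rather than taken from the paper.

```python
DISTANCE_KM = 40.0

# Times quoted from the Louis Garneau paper, as minutes:seconds.
TIMES = {
    "regular road helmet": "53:20",
    "unnamed aero road helmet": "51:22",
    "Louis Garneau Course": "50:40",
}


def to_seconds(mmss):
    """Convert a 'minutes:seconds' string to whole seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds):
    """Convert whole seconds back to a 'minutes:seconds' string."""
    minutes, seconds = divmod(int(total_seconds), 60)
    return f"{minutes}:{seconds:02d}"


def average_speed_kmh(distance_km, time_s):
    """Average speed for a distance covered in `time_s` seconds."""
    return distance_km / (time_s / 3600.0)


baseline_s = to_seconds(TIMES["regular road helmet"])
for name, mmss in TIMES.items():
    time_s = to_seconds(mmss)
    saving_s = baseline_s - time_s
    print(
        f"{name}: {mmss}, "
        f"{average_speed_kmh(DISTANCE_KM, time_s):.1f} km/h, "
        f"saves {to_mmss(saving_s)} ({saving_s / baseline_s:.1%})"
    )
```

Worth noticing: the baseline time works out at 45km/h, so this scenario describes a very fast rider, and the 2:40 saving is 5% of the total time.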

Specialized has what it calls its Win Tunnel – the company’s own wind tunnel at its California HQ. You might have seen its short videos that cover things like whether having a beard or shaved arms has an aero effect.

Specialized compared the performance of its Prevail standard road helmet, Evade aero road helmet and S-Works TT helmet in the Win Tunnel and concluded that switching from the Prevail to the Evade would save you 40 seconds over 40km (25 miles).

Switching from the Evade to the S-Works TT helmet would save you another 20secs, but you’re not going to be using one of those for standard road riding/racing.

This testing was done on a time trial bike. The results would probably have been a little different on a road bike. In its sales literature, Specialized actually claims that the Evade would save you 46secs over 40km compared to a standard road helmet.

At what speed? Watch this video to find out why Specialized believes that the time will be more or less the same whatever the rider’s speed.

Specialized also says that a pro putting out 1,000 watts in a 200m sprint while wearing the Evade will finish 2.6m ahead of a rider in a Prevail putting out the same power.

The cynical among you might think that Specialized has an interest in exaggerating the aero performance of the Evade, but the S-Works Prevail and the S-Works Evade cost exactly the same – £159.99 – so there’s probably no benefit in the brand promoting one over the other.

And in the real world

Our man Dave Atkinson took the Giant Rivet aero helmet to road.cc’s local cycling circuit in order to find out whether wearing it would add speed compared to using a standard road helmet.

Over to Dave:

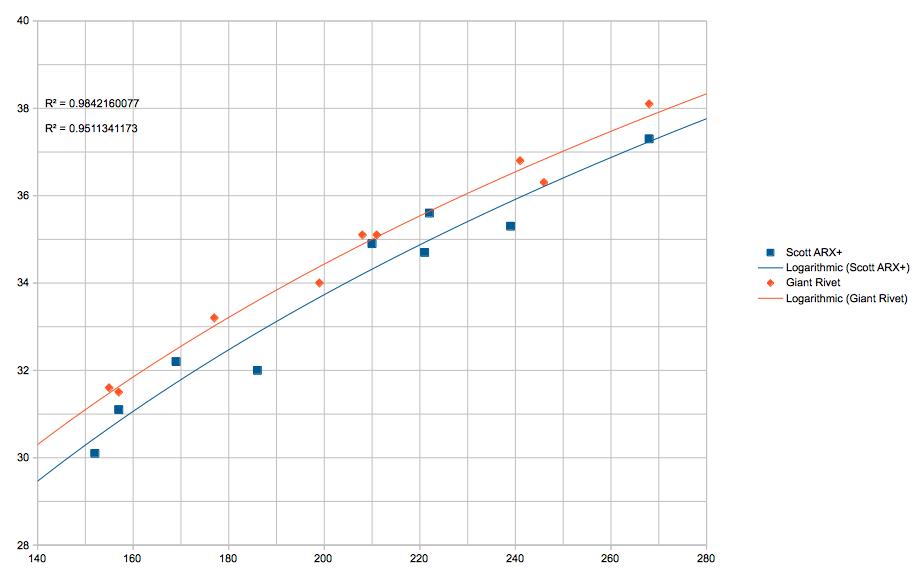

“This is a graph of my laps of Odd Down circuit wearing the Giant Rivet and a standard helmet for comparison, a Scott ARX Plus. I was using a set of Garmin Vector 2 pedals to measure my power, and the power spread is from an easy 150W average for the 1.5km lap up to about 270W average, which for me is going pretty hard. For reference, that's a fastest lap of 2:22, which is just under 4th cat race pace (2:18 on Saturday, when conditions were similar).

“The graph shows power (watts) on the X (horizontal) axis and km/h on the Y (vertical) axis.

“What do the numbers say? Well, the trend line for the Rivet is above the trend line for the Scott, and depending on where you measure the offset you're looking at just under or just over 10W of difference. Put another way, 2-3 seconds a lap.

“It's not a rigorously scientific test and there are always going to be other variables at play in an outdoor environment, but it does suggest there's a measurable difference in the real world. Every little helps, right?”
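For anyone who wants to try the same kind of comparison, here is a rough sketch of fitting a straight trend line of speed against power for each helmet and reading off the gap. The lap numbers below are made up purely for illustration; they are not Dave's data, and a straight line is only an assumption that may suit a narrow power range.

```python
LAP_KM = 1.5  # lap length quoted for the circuit


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def speed_at(line, watts):
    """Trend-line speed (km/h) at a given power."""
    slope, intercept = line
    return slope * watts + intercept


def watts_for_speed(line, kmh):
    """Power the trend line says is needed for a given speed."""
    slope, intercept = line
    return (kmh - intercept) / slope


def lap_seconds(kmh):
    """Lap time in seconds at a given average speed."""
    return LAP_KM / kmh * 3600.0


# HYPOTHETICAL (power in W, speed in km/h) lap averages, invented for this sketch.
standard_laps = [(150, 30.0), (180, 32.1), (210, 34.0), (240, 35.8), (270, 37.5)]
aero_laps = [(150, 30.5), (180, 32.7), (210, 34.5), (240, 36.4), (270, 38.0)]

standard_line = fit_line(*zip(*standard_laps))
aero_line = fit_line(*zip(*aero_laps))

target_watts = 250
v_std = speed_at(standard_line, target_watts)
v_aero = speed_at(aero_line, target_watts)

# Extra power the standard helmet would need to match the aero speed.
watt_gap = watts_for_speed(standard_line, v_aero) - target_watts
lap_gap = lap_seconds(v_std) - lap_seconds(v_aero)
print(f"At {target_watts}W: {v_std:.1f} vs {v_aero:.1f} km/h")
print(f"Offset: about {watt_gap:.0f}W, or {lap_gap:.1f}s per lap")
```

Where you measure the offset matters, as Dave says: if the two trend lines are not parallel, the watt gap changes with `target_watts`.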

Ventilation

Many aero helmets in the past have lacked ventilation. Manufacturers have made the shell smooth in order to minimise drag, keeping air out in the process. The result is that you end up uncomfortable.

When Ash reviewed the BBB Tithon, for example, he said that the ventilation wasn't spectacular, and Dave said that Bolle's The One helmet was too hot when all the vents were covered (it's adjustable).

However, many of the latest generation aero road helmets come with good venting. The Giro Synthe, the Kask Protone and the Louis Garneau Course, for example, all feel very similar to standard road helmets in terms of the amount of air that gets to your head.

When designing its new Ballista aero road helmet, Bontrager says that it created a thermal head form to evaluate the thermal efficiency of different helmets. The head form was covered with 36 thermocouples to determine the cooling properties of various designs and allow Bontrager to shape and position the vents most effectively.

As a result, the Ballista ended up with three vents in the front-centre, shaped to draw in air. Internal recessed channels are intended to manage airflow through the helmet and over the head, and exit ports at the back are designed to allow the air to escape easily so as not to increase drag.

Despite looking like it lacks ventilation, the Ballista feels cool in use, not too dissimilar to a normal road helmet.

Another option is to go with the Bell Star Pro, which has a slider in the top that allows you to open the vents for more cooling and close them for improved aerodynamics.

Lazer takes a different approach with the Z1 helmet that we've reviewed here on road.cc (and others in the range). The Belgian brand allows you to use an Aeroshell that can be either left off or added depending on whether you're prioritising cooling or aerodynamics (or protection from the weather conditions) on any given ride.

Kask's Infinity is similar in that you get easy ventilation adjustment via a central sliding panel. It's easy to slide the cover back and forth with one hand on the move, though there is no small lever as there is on the Bell helmet.

Cannondale does something similar with its Cypher Aero.

As a rule, aero road helmets are a little warmer than standard helmets. If you suffer with a hot head when riding or if it's a very hot day you might want to steer clear, but you’ll probably find a lot of the aero road helmets out there these days are comfortable in most conditions.

Weight

Here’s a quick roundup of the weights of the aero road helmets we’ve reviewed on road.cc (these are weights according to our scales rather than manufacturers' claimed weights).

| Helmet | Typical price | Weight |
|---|---|---|
| Limar Air Speed Road | £143.50 | 259g |
| Spiuk Korben | £66.99 | 257g |
| Bontrager XXX WaveCel | £198.99 | 361g |
| Lazer Bullet 2.0 | £169.95 | 359g |
| Abus AirBreaker | £165.94 | 214g |
| Kali Tava Flow | £193.59 | 272g |
| Bell Z20 Aero MIPS | £110.99 | 279g |
| Sweet Protection Falconer MIPS | £168.95-£200.47 | 291g |
| Specialized S-Works Evade II | £230.00 | 254g |
| HJC Furion 2.0 | ~£160.00 | 217g |
| MET Trenta 3K Carbon | £148.49 | 220g |
| Giro Vanquish MIPS | £135.00 | 367g |
| Abus GameChanger | £119.00 | 272g |
| Bontrager Velocis MIPS | £129.00 | 279g |
| Uvex EDAero | £118.55 | 318g |
| Met Manta | £114.99 | 215g |
| Met Strale | £60.00-£79.99 | 241g |
| Bontrager Ballista | £129.99 | 266g |
| Giro Synthe | £89.99-£188.99 | 223g |
| Kask Protone | £99.00-£149.00 | 250g |
| Bell Star Pro | £178.49 | 300g |
| Spiuk Obuss | ~£110.00 | 307g |
| BBB Tithon | £62.99 | 280g |
| Bolle The One | £82.94-£155.99 | 340g |
| Kask Infinity | £109.00 | 265g |
| Giant Rivet | £60.00 | 299g |
| Specialized Airnet | £125.00 | 288g |
| Ekoi Corsa Light + shell | £57.61 | 216g |
| Scott Cadence Plus Mips | £184.97 | 346g |
| Smith Overtake Mips | £69.99-£119.99 | 284g |

Giant claims a weight of under 250g for the new Pursuit aero helmet that we first saw at the Tour de France.

Aero road helmets do tend to be a little heavier than standard helmets of a similar price, but not by much and there are exceptions.

Price

Aero road helmets haven’t tended to be cheap in the past. The Kask Infinity is £220, for example, the Giro Synthe is £199.99 and, as mentioned above, Specialized’s S-Works Evade II is £240.

However, there are less expensive options out there these days. The Bontrager Ballista is cheaper than most at £129.99, the Spiuk Obuss is £99.95, and the BBB Tithon is £79.95.

Safety

Aero road helmets are made to meet the same safety standards as standard helmets.

So, should I buy an aero helmet?

Most aero road helmets are a little heavier and a little less vented than standard helmets, and they tend to be a little more expensive.

On the flipside, you’ll get a small reduction in drag. It’s a marginal gain but it might just make an important difference if you intend to go off the front and try your luck in a race.

Check out all of our helmet reviews here. For reviews on individual aero helmets, scroll up to the Weight section above and click on each model name.

Mat has been in cycling media since 1996, on titles including BikeRadar, Total Bike, Total Mountain Bike, What Mountain Bike and Mountain Biking UK, and he has been editor of 220 Triathlon and Cycling Plus. Mat has been road.cc technical editor for over a decade, testing bikes, fettling the latest kit, and trying out the most up-to-the-minute clothing. He has won his category in Ironman UK 70.3 and finished on the podium in both marathons he has run. Mat is a Cambridge graduate who did a post-grad in magazine journalism, and he is a winner of the Cycling Media Award for Specialist Online Writer. Now over 50, he's riding road and gravel bikes most days for fun and fitness rather than training for competitions.


54 comments

Nothing a simple caveat doesn't resolve.

Some major problems with this view. The first is the obvious one, even manufacturers with wind tunnels can have holes picked in their studies. The second is that you're advocating a race to the bottom, which we have already, hence the predictable, copy-paste reviews. And thirdly, without any testing, you don't discover problems, which you can then go on and refer back to the manufacturer, or carry out more in-depth analysis.

More testing, and improve the quality of it incrementally. If sites like road.cc had focused on testing since their inception, they'd be far more thorough.

Nice one guys for this article. Credit where credit's due.

Okay so how would you do it? Variables - power meter and a loop course knock out placebo effect and weather. What other variables are there for a real life regression analysis? Willing listener here - please design me a test and a standard to validate these claims.

Put a robot in it, pin it to 200w on a flat indoor track maybe. Or run a thousand sample test. Is that science enough? But then we couldn't afford the damn things for the R&D cost. It's a lid, it works to an effect.

He's all mixed up. He doesn't see the difference between essential controlled lab testing that a manufacturer should do, and real world testing to see if on-the-road experience correlates to the manufacturer's resultant claims.

All review sites need to do is sincerely head out, as Mat and Dave have here, and get some data points and we can see for ourselves if things stack up.

I'm definitely with Mo on this one. You can't use "real world testing" as a synonym for "half-baked testing".

The fact of the matter is, without error bars on your set of data no one knows how rigorous the testing protocol is. It could very easily be the case that if Dave were to head out and do that exact same test again he'd get completely opposite results because the variability in the testing protocol massively outweighs the effect that he's trying to measure!

Scientific methodology and statistical analyses exist for a reason and that is to ensure that the conclusions drawn are valid and supported by the data.

I agree, I'd love to see road.cc do more of this kind of thing, but the reality is, if it's not done properly then it's hardly worth doing at all.
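As a rough idea of what "error bars" could look like for a test like this, here is a minimal sketch that compares two sets of lap times and puts an approximate 95% interval on the difference. The lap times are invented for illustration, and the 1.96 multiplier is a normal approximation; with this few laps a t-based interval would be wider.

```python
import math
import statistics


def mean_difference_with_interval(a, b, z=1.96):
    """Difference in means (a minus b) with an approximate interval.

    Uses the unpooled (Welch-style) standard error and a normal
    multiplier `z`; treat it as a rough guide for small samples.
    """
    diff = statistics.mean(a) - statistics.mean(b)
    std_err = math.sqrt(
        statistics.variance(a) / len(a) + statistics.variance(b) / len(b)
    )
    return diff, diff - z * std_err, diff + z * std_err


# HYPOTHETICAL lap times in seconds at a similar power, made up for this sketch.
standard_helmet = [151.8, 149.6, 153.1, 150.4, 152.2, 148.9]
aero_helmet = [149.2, 150.9, 147.5, 151.3, 148.0, 149.6]

diff, low, high = mean_difference_with_interval(standard_helmet, aero_helmet)
print(f"Mean difference: {diff:.1f}s per lap (approx. 95% interval {low:.1f} to {high:.1f})")
if low <= 0.0 <= high:
    print("The interval includes zero, so these laps alone don't settle it.")
else:
    print("The interval excludes zero, so the difference looks real for these laps.")
```

If the interval straddles zero, lap-to-lap variability is as big as the effect being chased, which is exactly the worry raised in the comment above.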

Which parts of their testing were 'half-baked'? At what point did it deviate from the scientific method?

Well, for obvious editorial reasons there are precious few details on the experimental protocol or resulting data set, so your guess is as good as mine; all I can say is that the graph presented does not support the claim that "there's a measurable difference in the real world", it says that you've measured difference. To prove that the helmet was the cause of that difference is a very different prospect.

If you want to know more about the kind of experimental rigour that you have to employ to actually get meaningful data out of a test like this, have a read of this thread and ideally give it a go yourself.

http://forum.slowtwitch.com/forum/Slowtwitch_Forums_C1/Triathlon_Forum_F...

Sorry, I wanted to know where the author deviated from the scientific method. Can you point out exactly where that was?

As stated above, in drawing a conclusion that is not supported by the data.

In your view. In my view, it does.

That's a difference in opinion when drawing a conclusion, which happens even in rigorous studies.

The actual method here was scientific, unless you can point to a deviation, which I'm still waiting for.

I'm not sure your view would be upheld by many people with even a cursory knowledge of statistics, but that's your prerogative.

Science doesn't have much room for opinion, it's usually just the difference between those people who understand the data and those who don't.

As stated previously, there is little information about the testing protocol used, but what has been presented does not support the conclusions drawn.


ROFLMAO

Let me introduce you to a little tome called "This thing called Science" by a Mr Chalmers. You may find it enlightening.

Science is almost exclusively opinion. Mathematics is not. Statistics largely is.

"Matter is divided up into atoms, which are indivisible" JJ Thomson. That was his opinion based on sound evidence until he measured the mass of cathode ray (particles) at less than 0.05% the mass of a hydrogen atom.

Currently we know of 19 sub atomic particles.

All data is interpreted which is then presented as an opinion.

Any scientist who says they have irrefutable evidence, is not a scientist. No scientist knows anything for sure. They just have their opinion based on the available data.


You misunderstand how science works. Unlike religion, science makes no statements of 'truth'. Unlike opinions, there is little room for subjectivity in science. What there is, is evolution of the scientific consensus, propelled by newer experimental results and by better theories to interpret them.

By the way, newer scientific theories generally do not 'disprove' the older ones but rather extend them to a higher degree of generality, e.g. Einstein's relativity did not prove Newton was 'wrong', only that newtonian mechanics is a special case valid at lower speeds.

I think TeamExtreme summed up nicely what I was trying to say: it's not that I wouldn't love to see more scientific experiments on these topics, it's just that the overhead is probably too high. A semi-scientific method does not result in semi-significant results, it just results in no significance at all.

Again, things like randomization are important to look out for, but also, in this case, the power output of the rider. Given that the power-to-speed-relationship is not linear, an average power output does not tell you anything about the lap time, i.e., even under exactly the same test conditions and for the same average power output, you may end up with different lap times. How do you separate that from the aero-effect?

Talking about non-linear relationships, most riders will know that aero-efficiency is more important at higher speeds since the drag increases exponentially. However, you don't see that in the test results. According to the regression fit, the (absolute) savings are the same at high and low speeds, which does not indicate that the differences are caused by aerodynamic effects. Your absolute savings should be higher at higher speeds. I think if the author tries to perform an experiment in the field of aerodynamics, he/she should at least comment on things like that.
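Both points in this comment can be illustrated with the usual textbook approximation that aerodynamic power is 0.5 × air density × CdA × speed cubed (so drag force rises with the square of speed, and power with the cube). The sketch below ignores rolling resistance, gradient and wind, and the air density and CdA values are assumptions picked for illustration, not measurements of any helmet.

```python
RHO = 1.2            # air density in kg/m^3 (assumed)
CDA_STANDARD = 0.32  # HYPOTHETICAL drag area with a standard helmet, m^2
CDA_AERO = 0.31      # HYPOTHETICAL drag area with an aero helmet, m^2


def aero_power_watts(kmh, cda):
    """Power needed to overcome air resistance only, at a steady speed."""
    v = kmh / 3.6  # convert km/h to m/s
    return 0.5 * RHO * cda * v ** 3


def watts_saved(kmh):
    """Watts saved at a given speed by the lower drag area."""
    return aero_power_watts(kmh, CDA_STANDARD) - aero_power_watts(kmh, CDA_AERO)


def speed_kmh_from_power(watts, cda):
    """Steady speed for a given power in the same air-resistance-only model."""
    v = (watts / (0.5 * RHO * cda)) ** (1.0 / 3.0)
    return v * 3.6


# 1) Absolute savings in watts grow with the cube of speed.
for kmh in (25, 30, 35, 40, 45):
    print(f"{kmh} km/h: {watts_saved(kmh):.1f}W saved")

# 2) Same average power, different average speed:
#    steady 200W versus half the time at 100W and half at 300W.
steady = speed_kmh_from_power(200, CDA_STANDARD)
surging = 0.5 * (
    speed_kmh_from_power(100, CDA_STANDARD)
    + speed_kmh_from_power(300, CDA_STANDARD)
)
print(f"Steady 200W: {steady:.2f} km/h; 100W/300W split: {surging:.2f} km/h")
```

In this simplified model the uneven effort is always slower for the same average power, which is why average power per lap on its own can hide a difference as small as the one a helmet might make.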

What is semi-scientific? You accused the author of pseudo science earlier, but talk in pseudo science. None of these criticisms are profound in the slightest and are all addressed in lab testing.

It's odd to me that you can't discern between a rigorous lab test and a real world test of a review site. A real world test merely has to follow the scientific method, and this test has.

It will be as accurate as it is rigorous. It isn't very rigorous, as the author points out, so won't be very accurate. It simply gives us a data point that confirms manufacturers' lab testing.

Trying to take down a reviewer for doing a quick real world test based on lab standards is irrational, and the mark of a pseud.

I think this sort of report is great, and just the sort of thing Road.cc should be doing more of. The real-world data acquired by Dave is the most scientific and useful of all the tests shown, as it shows measurable difference for an actual rider doing typical speeds. He's using a power meter, so it doesn't matter whether he knows which helmet he's using, as long as he's not changing his body position with the helmet, which seems unlikely. If they could get 3-4 riders doing this sort of test, to clock up 100 or so laps, they could have some real confidence in the effect, and the info would be very useful when it comes to choosing new kit.

How about doing this test with a team of 3-4 riders of different weights, for all reviewed kit which claims to offer a speed advantage: wheels, frames, skinsuits, as well as helmets? It would only be 1-2 hrs on the circuit with a bunch of you, swapping the kit over and recording what kit each of you is using for each of the laps, and you'd have the most convincing and useful info for prospective buyers on all of the internet?

I would suggest that one of the real issues with "real world testing" is that it isn't enough of a controlled environment to justify the results with any statistical significance.

Computational Fluid Dynamics would yield results which could more accurately compare the difference in helmets; however, displaying results in terms of Drag Co-efficient means SFA to us when we're out on the road.

So I guess it puts reporters in a lose-lose situation, either do a statistically insignificant test which produces a fancy curve in terms of Watts and M/Kph (meaningful to us as cyclists) or do a controlled test which produces statistically significant results in terms of Drag Co-efficient (not very useful unless you fancy using the Navier-Stokes and Drag Co-efficient equations to derive forces and therefore power etc).

I'm soon to be moving to Edinburgh and have been considering for some time now whether to invest in an aero helmet, not for its alleged speed benefits but to keep my heid warm. Has anyone got any experience of helmets like the Lazer? Do they indeed keep your head warm in cold/wet weather or do you just end up sweating profusely underneath it?

No experience there, but consider adding a thin merino beanie and a windproof headband (to protect your ears from sub zero wind chill!) to your kit.

A mate had a Lazer with the aero shield and did find it too hot and sweaty; he changed to a Kask because of it.

+1

I have the cover for a Lazer helmet. As it only cost me an extra £16 to 'aero' my existing helmet, it's not bad... but it is hot. Fine for crits and TTs, but for everyday use, or use in the summer, probably not suitable.

If you've already got that helmet, the shell add-on is worth it. But to buy from scratch I'd look elsewhere.

I got a Giro Air Attack for exactly this reason. It really does cut down on the horrible brain freeze you get on really cold days. It's not been too warm though, which is quite surprising.

One thing that the article doesn't mention is that the Air Attack is noticeably quieter in terms of wind noise compared to my very vented other helmet. It really helps when keeping an ear out for traffic.

Similarly for me with Kask Infinity. I wear it on cold days and will open/close the vents according to my head temperature. It does make a difference.

Strangely, I've never felt too hot in it on warm days.

That was my thinking too: I bought an aero helmet in the sales as my winter helmet. First time I wore it I got ice cream head from the cold wind whistling through! So my thinking was pretty flawed. It seems as ventilated as my normal road helmet, which I was meant to switch to in summer but somehow never got round to.

The lack of vents will at least keep the snow off though - but don't bank on it being noticeably warmer than normal helmets.

In deepest coldest winter I go two buffs - one under the hat and over the ears. The other round the neck and up over the jaw if needs be.

Got a Giro Synthe yesterday, weighed 249g in a size M.

Actually feels really heavy to me when on, maybe because my other hat is a Prolight around 169g.

Get used to it I guess
