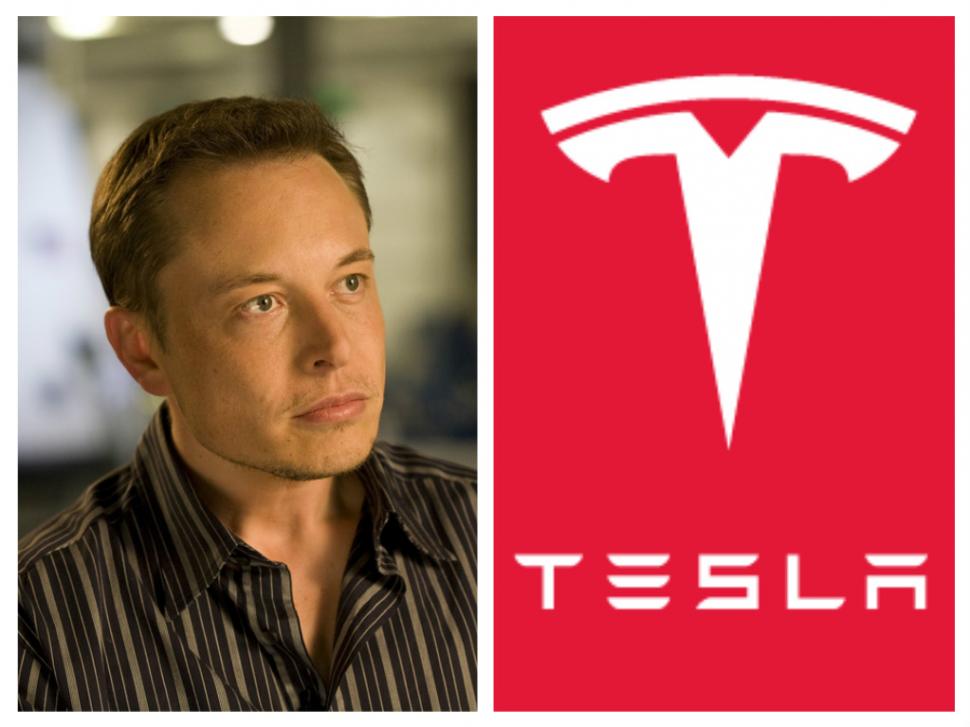

Elon Musk - image via Flickr user OnInnovation - and Tesla logo.jpg

It would be "morally reprehensible" to delay the release of autonomous vehicles - says Elon Musk

Elon Musk, the founder of electric automobile and storage company Tesla Motors, said that it would be "morally reprehensible" to delay the release of partially autonomous vehicles.

The founder of PayPal and solar power company SolarCity released his "Master Plan, Part Deux" this week, which laid out his plans for the coming decade with regard to the growth of electric and autonomous vehicles.

In the statement he talked about the spread of autonomous vehicle technology across the entire Tesla range, how safe autonomous technology is - even at the partial level available to current Tesla owners - and how it would be outrageous not to implement these technologies for fear of bad press or legal liability.

Given that the first road death in a Tesla while it was in semi-autonomous autopilot mode occurred in May, Musk's comments are particularly poignant.

It later became apparent that the driver was not abiding by Tesla's guidelines for operating a vehicle in semi-autonomous mode. Nonetheless, question marks were raised over the safety of such vehicles - especially for vulnerable road users.

Therefore, Musk's comments that all Teslas will soon have the capacity to be fully self-driving could be worrying for some road users.

He said: "All Tesla vehicles will have the hardware necessary to be fully self-driving with fail-operational capability, meaning that any given system in the car could break and your car will still drive itself safely."

Despite those fears, Musk appears to back autonomous technology to the hilt while defending what appears to be a system in its infancy.

"It is important to emphasize that refinement and validation of the software will take much longer than putting in place the cameras, radar, sonar and computing hardware," he wrote.

"Even once the software is highly refined and far better than the average human driver, there will still be a significant time gap, varying widely by jurisdiction, before true self-driving is approved by regulators."

He suggested that it will take somewhere within the realm of 6 billion miles of testing before regulatory bodies are ready to accept the technology. Current testing is happening at just over 3 million miles per day.
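As a rough illustration of what those two figures imply, the short sketch below divides the mileage target by the daily testing rate. It assumes a constant rate, rounds "just over 3 million" down to 3 million, and counts from zero miles; none of these assumptions come from Musk's statement, and the variable and function names are made up for the example.

```python
# Back-of-envelope estimate only. Assumptions (not from the source):
# a constant daily rate, and counting from zero accumulated miles.
TARGET_MILES = 6_000_000_000   # "6 billion miles" cited in the article
MILES_PER_DAY = 3_000_000      # "just over 3 million miles per day", rounded down


def days_to_target(target_miles: int, miles_per_day: int) -> float:
    """Return the number of days needed to log target_miles at a fixed rate."""
    return target_miles / miles_per_day


days = days_to_target(TARGET_MILES, MILES_PER_DAY)
years = days / 365.25
print(f"{days:.0f} days, about {years:.1f} years")  # prints "2000 days, about 5.5 years"
```

On those assumptions the target is roughly five and a half years away; a growing fleet would shorten that, which the sketch does not attempt to model.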

Those regulatory restrictions don't limit the use of partial autonomy though, which is why it was possible for a driver to have died while using the autopilot feature.

Even in the face of such a tragedy, Musk maintained that it would be "morally reprehensible" not to implement the technology.

"The most important reason [for deploying partial autonomy now is] that, when used correctly, it is already significantly safer than a person driving by themselves," he wrote.

"It would therefore be morally reprehensible to delay release simply for fear of bad press or some mercantile calculation of legal liability."

Musk goes on to say that according to the National Highway Traffic Safety Administration's 2015 report, deaths involving on-road vehicles increased by 8% - to one every 89 million miles. Musk claims that his autopilot technology will soon exceed twice that number, and the system is getting better every day.

He goes on to say it would make as much sense to turn off Tesla Autopilot as "it would to disable autopilot in aircraft, after which our system is named."

With regard to cyclists, Tesla has been far less vocal than other autonomous vehicle researchers.

Google's autonomous vehicle concept was designed with vulnerable road user safety in mind - with foam bumpers to soften any unlikely impacts - while they've also patented sticky bumper technology to reduce the chance of extra impacts.

Google has also been vocal about its software which has been programmed to pay special attention to cyclists, and to recognise commonly used hand signals.

Meanwhile Renault's chief executive took a swipe at cyclists, calling them "one of the biggest problems" for driverless cars.

Tesla's roadmap of an autonomous vehicle future may be light on details that will reassure vulnerable road users, but at least the American company isn't actively antagonising them.

34 comments

Billionaire says it's morally reprehensible to delay release of self autonomous vehicles, which he just happens to make lots of money from, but which will do nothing to resolve congestion.

Sounds plausible.

Congestion is but one of many problems. It's not Musk's job to solve every issue on the planet. He's doing his bit. Are you? Simply riding bikes doesn't make us any better than Musk who's investing his time in to reducing emissions and making travel safer.

Did I say it was?

Don't be so disingenuous. He isn't 'doing his bit' at all. He is a businessman that is selling his product line, which happens to be electric cars. Now with a new self autonomous driving feature.

He is not pushing a clean mass transit system that will reduce pollution, congestion and travel times. He is selling a luxury line of electric cars that will do nothing to reduce any of the above.

It can also easily be argued that electric cars just displace their environmental impact anyway.

I guess you just pulled that out of your arse along with the rest of your comment?

Oh no, internet tough guy act.

You got called out and have nothing to come back with other than weak snipes and ironic use of the word disingenuous.

To recap, Musk is doing his bit by pushing electric, autonomous vehicles, legalising them, and being a billionaire selling his product line doesn't change that (logic and all). And rabid internet guys here is doing sweet FA, bar whinging about him not solving congestion.

Go make a billy, come back and solve congestion, and I'll give you a cookie. Posh one from Waitrose.

By the same logic, it is "morally reprehensible" that public transport hasn't become universal, joined-up and priced at cost. That is a solution to the danger of letting every idiot and egomaniac pilot their own deadly vehicle which has been viable for a century or more (arguably more so in the past than in the car-centric society we have now.) Of course, you'd have to pay lots of people to be professionally and continuously trained to operate trains and buses, and to be really efficient and cheap would have to be state-run, so it isn't likely to be as lucrative as developing software once and selling it millions of times.

I'm not impressed by Musk's rather self-serving moralism.

I was reading in Scientific American over the weekend that we are still at least a decade (or more) away from a truly fully autonomous vehicle. They simply don't work in a number of driving conditions such as snow. The best offering so far is high, not full, automation. The problem with these semi-autonomous vehicles is that we humans get complacent, like the guy that was killed in his Tesla. For instance, when Google was testing its autonomous vehicles they told their (human) drivers that they must be alert and ready to take control of the car at all times. In one incident they found that their driver climbed into the back of the car while it was cruising on the highway to grab some paperwork he left in his bag. Driver attention (or the lack thereof) is the biggest challenge to the automotive industry as it edges ever closer to fully autonomous vehicles.

The incident with the Tesla driver who was killed is not really a fair example. The lorry driver pulled out on him and chances are that even if you'd been fully paying attention as a driver you'd have still driven into it and died.

I think the direction of travel here is pretty clear and inevitable. Ultimately it will be safer and for most people freedom they want from cars is to go where they want, when they want; this is not impacted by autonomous cars.

What I think will also follow is the reduction in personal ownership of cars - for most of us a car is an expensive item that is unused for the vast majority of its life. If I can quickly order a car (of the appropriate size) to turn up at my door and take me to where I need to go that's perfect. The [electric] car can also go off and get itself charged when needed.

Personally I'm all in favour - this year I'll cycle about 5000 miles and drive around 3000 and I think both will be safer and more enjoyable in the future.

Won't this technology kill the car insurance industry? Surely the owner won't need to be insured, it would be the manufacturer as any accident will be tech failure?

Big legal debate at the moment. For a while no, because you'll be considered the supervisor and still in control, but looking past the transitional phase, which I have no doubt we are just about to enter, then I'm not sure. Looks more like product liability when you enter the realm of (actual) fully autonomous vehicles. That could sink traditional insurance and I'm sure they have their shills working overtime to ensure that the element of personal responsibility remains in personal transport for as long as possible.

I live in SF, USA most of the time. There's a high concentration of teslas there.

I commute by bike 5 days a week there. Every ride I take there's at least one car doing crap (and a zillion cyclists that do much worse, to be honest - cyclists are pretty bad here). Every single ride though, a car (or more) will do something stupid that puts me in more danger than normal and that is also against the law.

Once, in the past 6mo, I had a tesla driver cut me over just before an intersection.

Now, I know tesla autopilot is not rated to detect cyclists. However, the car went close enough to be in range of the lidars and it instantly weaved with no driver input (i always look at the tires alignment with pavement and the driver through the glass in order to anticipate whatever fuckery is going to come at me that day).

While the car did not save my life (I figured that driver was going to cut me off a few seconds before), it was really comforting to see it weave like that. I cannot wait until all cars have such autopilots. It's absolutely brilliant. Made my day.

No Lidar on the Tesla, that will be triggering ultrasonic sensors which work within a few metres to prevent collisions. I make the distinction for an important reason.

The focus within the auto industry is on self driving, because it's a revolutionary feature and is likely to be extremely disruptive (see the many discussions about car as a service). However, if the focus is simply safety first, you don't need an autonomous car and it's a considerably cheaper and simpler project. I'd make a bet we could cut 80% of all road collisions, using nothing but ultrasonics and a forward facing camera or radar, a combination that costs just a few percent of a Lidar sensor.

But the only way to do that is through Gov't regulation, because as a feature self driving is much easier to sell than a "this car won't let you drive like an arsehole and kill people" package, even if the latter is much cheaper.

Except it's not, because car manufacturers have been introducing "arsehole prevention" features for decades. Some are fairly clear and dramatic: antilock brakes, parking sensors, antiwheel spin systems; some are less so: automatic lights and wipers. There will be more, they will become progressively cleverer, more expected (can you even buy a new car without antilock brakes now?) and take more control from the human while each one individually does only one little thing. That's the desirable way to sell what cumulatively adds up to an almost autonomous car.

Screw self driving cars. If I want to do a burnout at the traffic lights I damn well will!

Screw that company as well. It only survives on handouts and ZEV credits because other manufacturers can't be arsed to make enough electric cars.

The Los Angeles Times calculated that Elon Musk’s three companies, Tesla Motors, SolarCity, and SpaceX, combined have received a staggering $4.9 billion in government support over the past decade. As Kerpen noted: “Every time a Tesla is sold . . . average Americans are on the hook for at least $30,000 in federal and state subsidies” that go to wealthy Tesla owners. This is crony capitalism at its worst.

How awful that the state is subsidising a new technology that promises to produce a significant reduction in airborne pollution.

Well this is the problem. Any subsidy into any private business can be taken as being crony capitalism, even if that business is producing the elixir of life.

In the current economic setup the best we can do is choose the lesser of a million evils.

Tesla are the devil, but a cuddly one that ultimately may change the landscape. Can't see anyone else pushing us in to the next era of transport as fast as they are so I'm behind them 100% for now.

You do realise this is a grown-ups' site?

Don't worry, it's my bedtime soon and my dad said he'd be checking my posts from now on.

Really? I guess you haven't read the comments on the naked cyclist story then?

Sounds like some dodgy figure cooked up for the referendum

But it's not quite as simple as you suggest:

"The figure compiled by The Times comprises a variety of government incentives, including grants, tax breaks, factory construction, discounted loans and environmental credits that Tesla can sell. It also includes tax credits and rebates to buyers of solar panels and electric cars."

More detail here:

http://www.latimes.com/business/la-fi-hy-musk-subsidies-20150531-story.html

Certainly makes it easier to remove driving licence for life (illness, infirmity, irresponsibility, incompetence) or at least considerable periods of time if autonomous vehicles are a practical option. Personally I'd love to autopilot the 90% of long car journeys that are a chore.

Man basing large portion of his sales proposition on currently restricted technology says restriction should be lifted...

Flippancy aside, I do think a calculated deployment of self drive should be done sooner rather than later. E.g. for all motorways & A roads the technology can be enabled, as the environment is more structured than, say, a housing estate road.

I totally disagree. Automobiles as well as bicycles are about freedom of movement, not just transportation. Having robots deciding over the actual movement of people is morally reprehensible.

Yes, people are getting run over. The correct response to this is to increase driver responsibility and not shuffle the problem over to machines. In a civilized society, responsibility and legal liability go hand in hand with freedom.

Autonomous cars can actually help some people to achieve freedom of movement - I'm thinking of various disabilities that should prevent someone driving. The bigger ethical problems occur when self-driving cars are everywhere and insurance becomes too expensive for human drivers, thus pricing them off the road.

Humans should always have the capability and responsibility to take over if the autonomous system fails. Jumbojets mostly fly themselves but we still put pilots in the cockpit just in case.

And we've seen pilots override autopilot with disastrous consequences, or either not follow the advice of software or react wrongly to software which knows collisions are about to happen, like Uberlingen.

Autopilot software isn't the same in 2016 as it was 30 years ago. It's 'smarter' than pilots as it can detect issues that humans simply cannot and calculate the best response. I think given the climate we live in with hijackings an actual occurrence, it would be easy to make the case for autopilots being allowed to override pilots where they are in serious error or putting passengers at risk. This could be overseen at the Terminal for as many checks as you'd like.

It's too easy to cause devastation in a plane. The Germanwings crash is a really strong case for allowed autopilot oversight. You don't even need a pilot any more. Planes can fully take off and land without one, avoid collisions etc.

Unless you're blind, then it wouldn't be very fair on you. The failure mode of a car tends to be preferable to the failure mode of a plane. Autonomous cars can have a simple default strategy of reduce speed and stop or possibly pull into the side of the road depending on the nature of the failure. Jets would have a bit of a problem just remaining in the air where they are (never mind how the passengers would get out if the jets could just hover).

Fortunately, the proponents are counting on the insurance industry much more than government to drive the introduction of this technology. Enjoy your freedom, here's the bill.

It's fine and dandy holding the driver properly responsible once he's killed you. Personally I'd sooner be alive and replace the driver with a computer.

Ask the parents of the pupils in the French coach crash if they think a driver who can't stay awake is preferable to a machine.
